Dive Brief:

- Alphabet's autonomous driving unit Waymo updated its software in recent months after two of its ride-hailing vehicles struck a pickup truck that was being towed in Phoenix in December, the company announced in a blog post.

- The fleet-wide software recall followed an internal company investigation and meetings with the National Highway Traffic Safety Administration after a Dec. 11 incident in Phoenix that caused minor damage to both Waymo vehicles. No injuries were reported.

- Waymo said its engineering team went to work immediately to understand what happened. The company said it quickly tested and validated a fix and began pushing an over-the-air software update to its entire fleet from Dec. 20 through Jan. 12.

Dive Insight:

The safety of autonomous ride-hailing vehicles operating on public roads has been under intense scrutiny since last fall, when a vehicle operated by General Motors’ Cruise unit struck and seriously injured a pedestrian in San Francisco moments after she had been hit by another car.

Waymo said it first reported the incident to NHTSA on Dec. 15 and then met with NHTSA staff four times between December and February. Based on those meetings and its internal analysis of the events, the company filed a voluntary recall report on Feb. 13 covering the vehicle software it was using at the time.

Waymo said the two vehicles involved were not carrying passengers at the time.

“This voluntary recall reflects how seriously we take our responsibility to safely deploy our technology and to transparently communicate with the public,” Waymo wrote in a blog post.

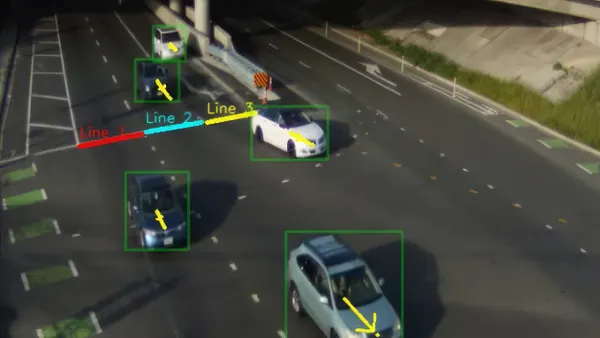

According to Waymo, one of its autonomous ride-hailing vehicles made contact with a backward-facing pickup truck that was being towed. Waymo claims the pickup truck was towed improperly and “persistently angled across a center turn lane and a traffic lane.” In addition, the tow truck driver failed to stop after the first collision. Minutes later, a second Waymo vehicle made contact with the same towed pickup truck.

The company referred to the minor accidents as an “unusual scenario” in its blog post.

Waymo attributed both collisions to a “persistent orientation mismatch” between the towed pickup truck and the tow truck, which caused the vehicles’ autonomous driving software and hardware stack to “incorrectly predict the future motion of the vehicle being towed.”

For companies like Waymo and Cruise that develop complex software for autonomous vehicles, rare scenarios like this one are known as “edge cases”: situations that human drivers rarely, if ever, encounter.

Dealing with the unpredictability of edge cases remains a challenge for both tech companies and automakers looking to develop and scale autonomous driving technology. According to S&P Global, it is one reason emerging fields such as AI, deep learning and machine learning are being used to improve perception and decision-making in autonomous vehicles, helping them better identify other vehicles, pedestrians, cyclists and lane markings and navigate more safely.